Artificial intelligence (AI) is rapidly transforming our world, from healthcare to transportation to finance. While AI offers immense potential for progress, it also raises critical ethical questions that we must address. As the technology evolves at an unprecedented pace, navigating the crossroads of AI and ethics has become increasingly urgent.

This article explores the complex interplay between AI and ethics, examining the key considerations that should guide how AI systems are developed and deployed. We look closely at algorithmic bias, data privacy, and job displacement, and then turn to regulation, governance, and practical frameworks for responsible AI. Ultimately, our goal is to foster a responsible and equitable future for AI, one where technology serves humanity while upholding fundamental ethical values.

The Rise of AI: Opportunities and Challenges

AI is reshaping many aspects of our lives, offering unprecedented opportunities while also presenting significant challenges.

One of the most prominent opportunities is the potential for AI to boost productivity across industries. AI-powered tools can automate repetitive tasks, freeing human workers to focus on more complex and creative work. This can increase efficiency, reduce costs, and improve quality. AI can also unlock new possibilities in fields such as healthcare, finance, and education. For example, algorithms that analyze large volumes of medical data can help detect diseases earlier, financial tools can offer personalized advice, and educational software can adapt materials to each student's pace and progress.

However, the rise of AI also raises serious concerns. A major challenge is job displacement: as AI becomes more sophisticated, it could automate tasks currently performed by people, leading to job losses in certain sectors. Beyond employment, ethical concerns surround the use of AI in areas such as facial recognition, autonomous weapons systems, and algorithmic decision-making. It is crucial to ensure that AI systems are developed and deployed responsibly, without bias or discrimination.

The future of AI is intertwined with the choices we make today. By embracing the opportunities while addressing the challenges, we can harness the power of AI to create a more prosperous and equitable society. This requires collaboration between policymakers, researchers, industry leaders, and the public to establish clear ethical guidelines and regulations for the development and deployment of AI.

Ethical Considerations in AI Development and Deployment

Building a responsible technological future demands a proactive approach to the ethical questions that arise as AI systems are designed, built, and put into use. The most pressing of these concern bias, transparency, data privacy, employment, and misuse. Addressing them is what allows AI systems to be developed and used responsibly and fairly.

One paramount concern is bias. AI algorithms are trained on vast datasets, and if these datasets contain biases, the resulting AI systems may perpetuate and amplify existing societal inequalities. It’s imperative to identify and mitigate biases in training data to ensure that AI systems treat all individuals fairly.
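To make "identify and mitigate" concrete, here is a minimal sketch under stated assumptions. It first checks how often each group receives a positive label in a training set. It then computes per-example weights using the reweighing technique described by Kamiran and Calders, which weights each example by P(group) * P(label) / P(group, label) so that group membership and label become statistically independent in the weighted data. The group names, labels, and toy data are hypothetical. Reweighing is only one of several preprocessing approaches, and it addresses skewed label rates rather than every form of bias.

```python
from collections import Counter


def positive_rate_by_group(groups, labels):
    """Share of positive labels (1) within each group of a training set."""
    totals = Counter(groups)
    positives = Counter(g for g, y in zip(groups, labels) if y == 1)
    return {g: positives[g] / totals[g] for g in totals}


def reweighing_weights(groups, labels):
    """Per-example weights that make group and label independent.

    Reweighing (Kamiran and Calders): w(g, y) = P(g) * P(y) / P(g, y),
    with each probability estimated from counts in the training set.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical toy training set: group membership and binary labels.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

print(positive_rate_by_group(groups, labels))
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
```

In this toy data, group "a" has a 75% positive rate and group "b" has 25%. After weighting, both groups have a weighted positive rate of 50%. The weights would then be passed to a learning algorithm that accepts per-example weights.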

Another ethical challenge is transparency and explainability. Many AI systems operate as “black boxes,” making it difficult to understand how they reach their decisions. This lack of transparency can lead to mistrust and raise questions about accountability. Developing AI systems that are transparent and explainable is vital to foster trust and ensure that decisions made by AI can be understood and challenged.
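For simple models, an explanation can be exact. The sketch below assumes a linear scoring model; the feature names, weights, and applicant values are hypothetical. It breaks one prediction into per-feature contributions relative to a baseline, such as an average applicant. Because the model is linear, the contributions add up exactly to the difference between the two scores.

```python
def explain_linear(weights, bias, x, baseline):
    """Explain one prediction of a linear scoring model.

    Each feature's contribution is weight * (value - baseline value), so the
    contributions add up exactly to score(x) - score(baseline).
    """

    def score(values):
        return bias + sum(weights[name] * values[name] for name in weights)

    contributions = {
        name: weights[name] * (x[name] - baseline[name]) for name in weights
    }
    return score(x), score(baseline), contributions


# Hypothetical credit-scoring model and applicant, for illustration only.
weights = {"income": 0.5, "debt": -0.8, "years_employed": 0.3}
bias = 1.0
applicant = {"income": 4.0, "debt": 3.0, "years_employed": 2.0}
average_applicant = {"income": 5.0, "debt": 1.0, "years_employed": 4.0}

applicant_score, baseline_score, contributions = explain_linear(
    weights, bias, applicant, average_applicant
)
# List features from largest to smallest influence on this decision.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {value:+.2f}")
```

Here, debt is the largest contributor to the applicant's lower score. Non-linear models such as deep neural networks do not decompose this neatly. For those models, approximate attribution methods such as SHAP or LIME are often used, and their outputs are estimates that should be read as such.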

The ethical implications of data privacy and security are also significant. AI systems often rely on vast amounts of personal data. It’s essential to implement robust data privacy and security measures to protect sensitive information and prevent misuse. This includes obtaining informed consent and ensuring data is used responsibly and ethically.
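Two common safeguards are data minimization, which means keeping only the fields a model actually needs, and pseudonymization, which replaces direct identifiers with tokens. The sketch below uses Python's standard `hmac` and `hashlib` modules to replace an identifier with an HMAC-SHA256 token. The field names, record, and key are hypothetical.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same identifier and key always give the same token, so records can
    still be joined, but the raw value is not stored.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, allowed_fields: set, id_field: str, secret_key: bytes) -> dict:
    """Keep only the fields the model needs, plus a pseudonymous ID."""
    cleaned = {k: v for k, v in record.items() if k in allowed_fields}
    cleaned[id_field] = pseudonymize(record[id_field], secret_key)
    return cleaned


# Hypothetical key and record. In practice the key would be held in a
# secrets manager, separate from the data it protects.
SECRET_KEY = b"example-key-do-not-use-in-production"
raw = {
    "user_id": "alice@example.com",
    "full_name": "Alice Example",
    "home_address": "1 Example Street",
    "age_band": "30-39",
    "visits_last_30_days": 4,
}
safe = minimize_record(raw, {"age_band", "visits_last_30_days"}, "user_id", SECRET_KEY)
print(safe)
```

Pseudonymization reduces exposure, but it does not make data anonymous. Anyone holding the key can re-link tokens to people, and the remaining fields may still allow re-identification. For this reason, the key must be stored and access-controlled separately from the data.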

Furthermore, the impact of AI on employment warrants careful consideration. AI can automate existing tasks, but it can also create new kinds of work. It's important to anticipate and mitigate job displacement while using AI to enhance productivity and create new jobs.

Finally, the potential for AI misuse must be addressed. AI systems could be used for malicious purposes, such as spreading misinformation or automating harmful activities. Developing safeguards and establishing ethical guidelines for AI development and deployment is crucial to prevent its misuse.

In conclusion, ethical considerations are fundamental to building a responsible AI future. By proactively addressing issues of bias, transparency, data privacy, employment, and misuse, we can ensure that AI is developed and deployed ethically, fostering innovation while upholding human values and principles.

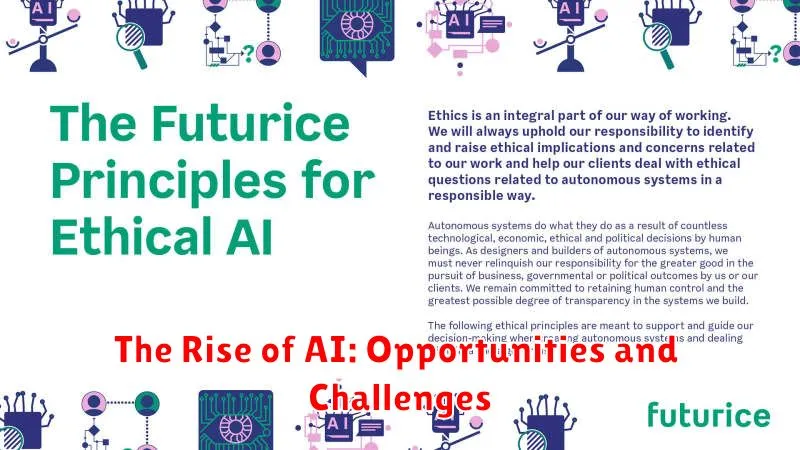

Bias in AI Algorithms: Causes, Consequences, and Mitigation

AI promises to transform many aspects of our lives, but that promise comes with a critical challenge: the potential for bias in its algorithms. Such bias can perpetuate existing inequalities and lead to unfair outcomes. Understanding its causes, consequences, and mitigation strategies is crucial for building a responsible and ethical technological future.

Causes of Bias in AI Algorithms

Bias in AI algorithms arises from various sources, including:

- Biased Data: AI algorithms are trained on vast datasets, and if these datasets reflect existing societal biases, the algorithms are likely to learn and reproduce those biases.

- Algorithmic Design: Design choices made during development can introduce bias. For example, a model that uses postal code as a feature may treat it as a proxy for race or income, favoring some groups over others without ever seeing those attributes directly.

- Human Bias: Developers, data scientists, and others involved in AI development can unknowingly introduce their own biases through choices about what data to collect, how to label it, and which outcomes to optimize.

Consequences of Bias in AI Algorithms

The consequences of biased AI algorithms can be far-reaching and harmful:

- Discrimination and Inequality: Biased algorithms can lead to unfair treatment of individuals or groups based on protected characteristics like race, gender, or socioeconomic status.

- Erosion of Trust: Biased AI systems can undermine public trust in technology and its ability to be fair and impartial.

- Reinforcement of Existing Inequalities: Biased AI algorithms can exacerbate existing social inequalities by perpetuating unfair outcomes.

Mitigation Strategies for Bias in AI Algorithms

To address bias in AI algorithms, it is essential to implement mitigation strategies throughout the AI development lifecycle:

- Data Collection and Preprocessing: Ensuring diverse and representative data is crucial. Auditing datasets for skew, augmenting data for under-represented groups, and reweighting training examples can all help reduce bias in the training data.

- Algorithm Design and Evaluation: Using fairness-aware algorithms and evaluating models with fairness metrics, such as comparing selection rates and error rates across groups, are essential steps in mitigating bias.

- Human Oversight and Accountability: Establishing robust systems for human oversight and accountability is critical to ensure responsible AI development and deployment.
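Evaluating a model for bias usually means comparing its behavior across groups. The sketch below computes two widely used measures for a binary classifier. The first is the gap in selection rates between groups (demographic parity difference). The second is the gap in true positive rates (equal opportunity difference). The group labels and predictions are hypothetical. Which metric matters depends on the application, the metrics can conflict with one another, and no single number establishes that a system is fair.

```python
def _mean(values):
    return sum(values) / len(values)


def group_fairness_report(groups, y_true, y_pred):
    """Per-group selection rate and true positive rate, plus the largest gaps.

    selection rate     = share of the group the model predicts positive
    true positive rate = share of the group's actual positives predicted positive
    """
    report = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        actual_pos = [i for i in idx if y_true[i] == 1]
        report[g] = {
            "selection_rate": _mean([y_pred[i] for i in idx]),
            # None when the group has no actual positives in this sample.
            "true_positive_rate": _mean([y_pred[i] for i in actual_pos]) if actual_pos else None,
        }
    sel = [r["selection_rate"] for r in report.values()]
    tpr = [r["true_positive_rate"] for r in report.values() if r["true_positive_rate"] is not None]
    parity_gap = max(sel) - min(sel)
    opportunity_gap = max(tpr) - min(tpr) if tpr else None
    return report, parity_gap, opportunity_gap


# Hypothetical evaluation data: group, true outcome, model prediction.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

report, parity_gap, opportunity_gap = group_fairness_report(groups, y_true, y_pred)
print(report)
print("demographic parity difference:", parity_gap)
print("equal opportunity difference:", opportunity_gap)
```

In this example, both gaps are 0.5. The model selects 75% of group "a" but only 25% of group "b". It also correctly identifies all actual positives in group "a" but only half of those in group "b".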

Conclusion

Bias in AI algorithms is a serious challenge that requires careful attention and proactive measures. By understanding the causes and consequences of bias and implementing mitigation strategies, we can work towards building an AI ecosystem that is fair, equitable, and beneficial for all.

Privacy Concerns in the Age of AI

The rapid advancement of AI has opened up new technological possibilities, but it also raises significant ethical concerns, particularly in the realm of privacy.

AI systems rely on vast amounts of data to learn and perform their tasks. This data often includes sensitive personal information, such as our location, browsing history, and even health records. Collecting and using such data raises serious privacy concerns, because the data can be exploited for malicious purposes, leading to identity theft, discrimination, and even social manipulation.

One key concern is data breaches. Like any system that stores large volumes of data, AI systems are vulnerable to hacking and cyberattacks that could expose personal data to unauthorized access. In addition, the growing use of facial recognition technology raises concerns about mass surveillance and the erosion of our right to anonymity.

Furthermore, AI algorithms can be biased, perpetuating existing societal inequalities. If training data contains discriminatory biases, the AI system will likely reflect and amplify these biases, leading to unfair or discriminatory outcomes. For example, biased facial recognition algorithms have been shown to misidentify people of color more often than their white counterparts.

Addressing these privacy concerns is crucial to ensuring that AI is developed responsibly and ethically. This requires a multifaceted approach, including:

- Robust data privacy regulations that protect individuals’ data and limit the collection and use of sensitive information.

- Transparency and accountability in AI systems, allowing individuals to understand how their data is being used and to hold companies accountable for data breaches.

- Development of AI algorithms that are fair, unbiased, and respectful of individual privacy.

- Education and awareness to empower individuals to understand their privacy rights and take steps to protect their data.

By prioritizing privacy and ethical considerations, we can harness the transformative power of AI while safeguarding individual rights and creating a future where technology empowers and benefits everyone.

The Impact of AI on Employment and the Workforce

AI is rapidly reshaping employment and the workforce. While it holds immense potential to automate tasks, improve efficiency, and create new opportunities, it also presents significant challenges and raises crucial questions about the future of work.

One of the most prominent impacts of AI is job displacement. As AI systems become more sophisticated, they can automate tasks previously performed by human workers. This raises concerns about job security and the prospect of large-scale job losses in certain sectors. Repetitive, rule-based tasks, such as data entry, routine assembly-line work, and scripted customer service, are particularly susceptible to automation.

However, AI is not simply about replacing jobs. It also creates new opportunities in fields related to AI development, data science, and AI-related services. As businesses adopt AI technologies, demand for professionals with skills in these areas will likely increase. This shift necessitates investment in education and training programs to equip individuals with the necessary skills to thrive in the AI-driven economy.

Beyond job creation and displacement, AI has the potential to enhance human capabilities. By automating routine tasks, AI can free up human workers to focus on more complex, creative, and strategic activities. This shift in focus can lead to increased productivity, innovation, and job satisfaction.

Furthermore, AI can play a critical role in addressing societal challenges. From healthcare diagnostics to environmental monitoring, AI applications have the potential to improve human well-being and contribute to a more sustainable future.

The impact of AI on employment and the workforce is multifaceted. While it presents challenges, it also offers immense opportunities for growth, innovation, and societal advancement. As we navigate the crossroads of AI and ethics, it is crucial to adopt a responsible approach that prioritizes the well-being of workers, fosters inclusivity, and ensures that the benefits of AI are shared by all.

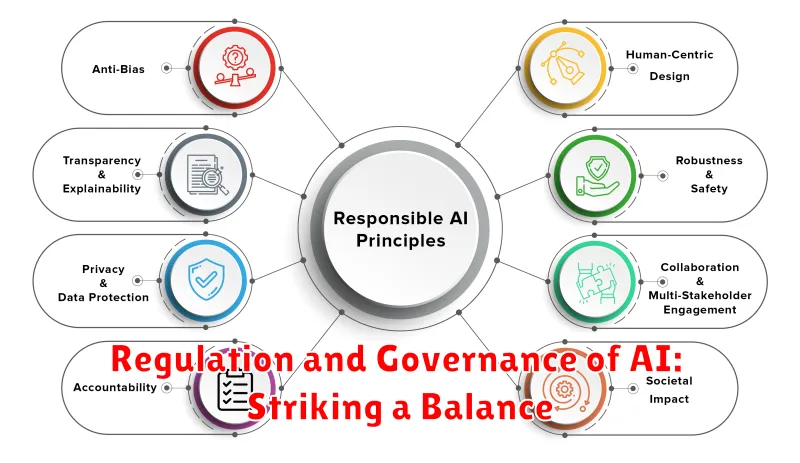

Regulation and Governance of AI: Striking a Balance

AI has the potential to revolutionize industries, improve lives, and solve complex problems, but its development and deployment raise critical ethical concerns. To harness AI responsibly, a delicate balance must be struck between fostering innovation and protecting individual and societal well-being. This calls for a robust framework for regulating and governing AI, one that prioritizes accountability, transparency, and fairness.

Regulation plays a crucial role in setting boundaries and establishing clear guidelines for the development and use of AI. It can address specific risks, such as algorithmic bias, data privacy violations, and potential job displacement. Regulations can also promote transparency by requiring companies to disclose how AI systems are developed and deployed, fostering public trust and accountability. However, it is essential to avoid stifling innovation through overly restrictive regulations. Striking a balance requires a nuanced approach that allows for flexibility and adaptability as AI technologies continue to evolve.

Governance extends beyond specific regulations and encompasses broader principles and frameworks for managing AI. It involves establishing mechanisms for oversight, ensuring ethical considerations are integrated into AI development, and promoting responsible research practices. Effective governance requires collaboration among stakeholders, including governments, industry leaders, researchers, and civil society. This collaborative approach fosters a shared understanding of the ethical implications of AI and helps to develop guidelines that align with societal values.

The key to navigating the crossroads of AI and ethics lies in finding the right balance between innovation and regulation. A comprehensive framework that incorporates both legal and ethical considerations is crucial for ensuring a responsible and beneficial future for AI. By fostering dialogue, establishing clear guidelines, and promoting collaboration, we can unlock the transformative potential of AI while mitigating its risks.

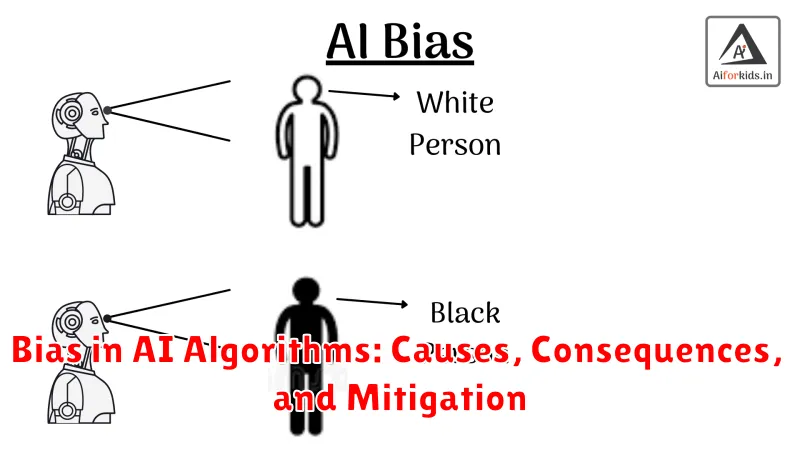

Promoting Responsible AI: Best Practices and Frameworks

As AI becomes increasingly integrated into our lives, it's crucial to ensure that it is developed and deployed responsibly. This requires a proactive approach that prioritizes ethical considerations and fosters trust in AI systems. This section outlines best practices and frameworks for promoting responsible AI.

Key Principles for Responsible AI

At the core of responsible AI development lies a set of fundamental principles that guide ethical decision-making and minimize potential harm. These principles include:

- Fairness: AI systems should be designed to treat all individuals fairly and avoid bias or discrimination.

- Transparency: The workings of AI systems should be transparent and understandable, allowing for accountability and trust.

- Privacy: User data should be protected and used responsibly, adhering to privacy regulations and respecting individual rights.

- Safety and Security: AI systems should be developed and deployed with robust safety measures to prevent unintended consequences or malicious use.

- Accountability: Clear lines of responsibility should be established for the development, deployment, and potential impact of AI systems.

Best Practices for Responsible AI

Implementing these principles requires a practical approach that involves various best practices throughout the AI lifecycle:

- Data Governance: Ensuring data quality, addressing biases, and implementing robust data privacy practices.

- Algorithmic Transparency: Making model decisions explainable and interpretable, allowing for analysis and identification of potential issues.

- Human-in-the-Loop: Incorporating human oversight and feedback mechanisms to ensure responsible AI decisions.

- Continuous Monitoring and Evaluation: Regularly assessing AI systems for fairness, bias, and effectiveness, and making necessary adjustments.

- Stakeholder Engagement: Involving diverse perspectives, including experts, users, and the public, to ensure ethical and responsible development.
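Human-in-the-loop review and continuous monitoring can be combined in a simple pattern. The model decides only when it is confident, uncertain cases go to a person, and every decision is logged for later audit. The sketch below assumes a model that outputs a score between 0 and 1. The thresholds, class names, and outcome labels are hypothetical and would need to be set for each application.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    case_id: str
    score: float
    outcome: str      # "approve", "deny", or "needs_human_review"
    decided_by: str   # "model" or "human_queue"
    timestamp: str    # UTC time the routing decision was logged


@dataclass
class ReviewRouter:
    """Auto-decide only confident cases; route the rest to a human reviewer.

    The thresholds are hypothetical placeholders, not recommended values.
    """

    low: float = 0.2
    high: float = 0.8
    audit_log: list = field(default_factory=list)

    def route(self, case_id: str, score: float) -> Decision:
        if score >= self.high:
            outcome, decided_by = "approve", "model"
        elif score <= self.low:
            outcome, decided_by = "deny", "model"
        else:
            outcome, decided_by = "needs_human_review", "human_queue"
        decision = Decision(
            case_id, score, outcome, decided_by,
            datetime.now(timezone.utc).isoformat(),
        )
        self.audit_log.append(decision)  # every decision is kept for audit
        return decision

    def human_review_share(self) -> float:
        """Fraction of logged cases sent to a person (0.0 if none logged)."""
        if not self.audit_log:
            return 0.0
        flagged = sum(d.decided_by == "human_queue" for d in self.audit_log)
        return flagged / len(self.audit_log)


router = ReviewRouter()
for case_id, score in [("c1", 0.95), ("c2", 0.5), ("c3", 0.1), ("c4", 0.6)]:
    decision = router.route(case_id, score)
    print(decision.case_id, decision.outcome)
print("share sent to humans:", router.human_review_share())
```

The share of cases sent to human review is one signal worth tracking over time. A sudden rise can indicate that incoming data has drifted away from the data the model was trained on. Some deployments may prefer to route every adverse outcome to a person, not only the uncertain ones.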

Frameworks for Responsible AI

Several frameworks have emerged to provide guidance for responsible AI development. Some prominent examples include:

- The AI Now Institute's Algorithmic Impact Assessment framework: A practical framework that helps public agencies assess automated decision systems, with an emphasis on accountability, transparency, and public input.

- The European Union's General Data Protection Regulation (GDPR): Sets legally binding rules for data protection and privacy that apply to AI systems processing personal data. These include limits on decisions based solely on automated processing (Article 22).

- The Partnership on AI: A multistakeholder organization founded by leading AI companies, now including academic and civil-society members, that publishes guidance on responsible AI development practices.

Conclusion

Promoting responsible AI is a shared responsibility, requiring collaboration among technology developers, policymakers, and society as a whole. By embracing best practices and leveraging frameworks, we can navigate the complex ethical landscape of AI and build a technological future that benefits everyone.

Building a Future Where AI Benefits Humanity

AI offers unprecedented opportunities for progress across industries. Realizing that potential responsibly requires that its development and deployment be guided by ethical considerations. Navigating the crossroads of AI and ethics is therefore essential to building a technological future in which AI benefits humanity.

To create a future where AI serves as a force for good, we must prioritize the following principles:

- Fairness and Non-discrimination: AI systems should be designed and deployed in a way that treats all individuals fairly and equitably, avoiding biases that could perpetuate social inequalities.

- Transparency and Explainability: The decision-making processes of AI systems should be transparent and understandable, enabling users to comprehend how outcomes are reached.

- Privacy and Data Security: The collection, storage, and use of personal data should be conducted with the utmost respect for individual privacy and data security.

- Accountability and Oversight: Mechanisms for accountability and oversight need to be established to ensure that AI systems are used responsibly and ethically.

- Human-Centered Design: AI systems should be designed to augment and enhance human capabilities, not to replace them.

By embracing these principles, we can foster a future where AI empowers individuals, promotes social good, and contributes to a more just and equitable world. We must proactively engage in dialogue, research, and policy development to shape the ethical landscape of AI.

The crossroads of AI and ethics present a unique challenge and an extraordinary opportunity. Let us work together to ensure that AI empowers humanity and leads us towards a brighter future.