Artificial intelligence (AI) is evolving rapidly, and new frameworks and tools appear constantly. For developers, keeping up matters: the framework you choose shapes how quickly you can build, train, and deploy models. In 2024, a handful of frameworks stand out for their capabilities and breadth of application, from deep learning to natural language processing.

This guide looks at the most popular and promising AI frameworks available in 2024, covering their key features, strengths, and limitations. We’ll explore how they can be used to tackle real-world problems across various domains. Whether you’re a seasoned AI developer or just starting out, it should help you choose the right framework for your projects.

The Rise of AI Frameworks in Software Development

AI is changing how software is built. AI frameworks give developers pre-built components, libraries, and tools for building intelligent applications that automate tasks, enhance user experiences, and drive innovation. By streamlining the development process, they make AI accessible to a wider range of developers.

One of the key factors driving the rise of AI frameworks is the increasing demand for intelligent applications. Businesses are constantly looking for ways to improve efficiency, personalize experiences, and gain a competitive edge. AI frameworks enable developers to build intelligent solutions that address these needs, from chatbots and recommendation systems to image recognition and natural language processing applications.

Another important factor is the availability of vast amounts of data. AI frameworks leverage machine learning algorithms and deep learning models to analyze large datasets and extract valuable insights. Developers can use these frameworks to build applications that learn from data, adapt to changing environments, and provide personalized recommendations.

The rise of AI frameworks has led to a significant shift in software development methodologies. Developers are now focused on building AI-powered applications that can learn, adapt, and interact with users in intelligent ways. Frameworks like TensorFlow, PyTorch, and Keras have become popular choices for developers looking to build AI applications.

AI frameworks are transforming the software development landscape by providing developers with the tools and resources they need to build intelligent applications. The future of software development is intertwined with AI, and these frameworks are playing a pivotal role in shaping this future.

Key Considerations for Choosing an AI Framework

With numerous frameworks available, choosing the right one is crucial for successful development. Consider the following factors to make an informed decision.

1. Ease of Use and Learning Curve: A framework should be intuitive and easy to learn, especially for developers new to AI. Look for frameworks with comprehensive documentation, tutorials, and active community support.

2. Flexibility and Scalability: The chosen framework should be flexible enough to adapt to different AI tasks and scalable to handle large datasets and complex models. Consider the framework’s support for various machine learning algorithms and its ability to leverage cloud resources.

3. Performance and Efficiency: AI frameworks should offer optimal performance and efficiency, minimizing training and inference time. Look for frameworks with optimized libraries and efficient execution environments.

4. Community Support and Ecosystem: A strong community and ecosystem provide access to resources, libraries, and pre-trained models. Active forums, tutorials, and community-driven projects can significantly accelerate development.

5. Industry Adoption and Use Cases: Consider the framework’s popularity and adoption in the industry. Frameworks with proven track records and widespread use in real-world applications often offer greater stability and reliability.

6. Hardware Compatibility: Ensure the framework is compatible with your hardware resources, including CPUs, GPUs, and specialized AI accelerators. Some frameworks offer optimized support for specific hardware platforms.

By carefully evaluating these considerations, developers can choose an AI framework that aligns with their project requirements and facilitates efficient and effective AI development.
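One way to make this evaluation concrete is a simple weighted scoring table. The sketch below is only an illustration: the criterion names, the weights, the candidate names (`framework_a`, `framework_b`), and the 1-5 ratings are all hypothetical placeholders, not measurements of any real framework. Replace them with your own assessment.

```python
# Hypothetical weights (summing to 1.0) for the six considerations above.
WEIGHTS = {
    "ease_of_use": 0.25,
    "flexibility": 0.20,
    "performance": 0.20,
    "community": 0.15,
    "adoption": 0.10,
    "hardware": 0.10,
}

# Placeholder 1-5 ratings for two made-up candidates; replace with your own.
CANDIDATES = {
    "framework_a": {"ease_of_use": 5, "flexibility": 3, "performance": 3,
                    "community": 4, "adoption": 4, "hardware": 3},
    "framework_b": {"ease_of_use": 3, "flexibility": 5, "performance": 4,
                    "community": 5, "adoption": 4, "hardware": 4},
}


def weighted_score(ratings, weights):
    """Return the weighted sum of ratings; every weighted criterion must be rated."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)


def rank(candidates, weights):
    """Return (name, score) pairs sorted from best to worst."""
    scored = [(name, weighted_score(r, weights)) for name, r in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = rank(CANDIDATES, WEIGHTS)
for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

The weights encode what matters to your project. A team new to AI might weight ease of use more heavily, while a team training very large models might favor performance and hardware support.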

Top AI Frameworks for Developers in 2024

Keeping up with the latest AI trends and technologies can be overwhelming, but the right framework lets you build and deploy AI solutions efficiently.

In 2024, several frameworks stand out for their power, versatility, and ease of use. These frameworks cater to a wide range of AI tasks, from natural language processing (NLP) and computer vision to deep learning and machine learning.

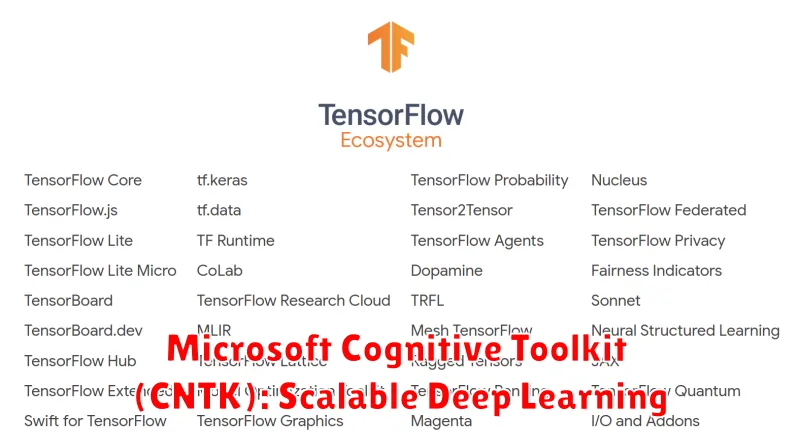

TensorFlow

Developed by Google, TensorFlow is a popular open-source framework that offers a comprehensive ecosystem for building and deploying machine learning and deep learning models. It provides a flexible architecture that supports various platforms and devices, making it suitable for a wide range of applications.

PyTorch

PyTorch is another widely used open-source framework known for its dynamic computation graphs and intuitive Python interface. It’s favored by researchers and developers for its flexibility and ease of use in deep learning research and development.

Keras

Keras is a high-level API that simplifies the process of building and training deep learning models. Early versions ran on top of TensorFlow or Theano; Keras 3 supports TensorFlow, JAX, and PyTorch as backends. It provides a user-friendly interface for developers who want to get started with deep learning quickly.

Scikit-learn

Scikit-learn is a powerful framework for machine learning in Python. It offers a wide range of algorithms for classification, regression, clustering, and dimensionality reduction, making it an excellent choice for general-purpose machine learning tasks.

Microsoft Cognitive Toolkit (CNTK)

CNTK is a deep learning framework developed by Microsoft. It’s known for its high performance and scalability in large-scale deep learning projects, but it is no longer actively developed: Microsoft announced version 2.7, released in 2019, as its last main release.

These frameworks offer a solid foundation for building innovative AI applications. Explore their features and choose the one that best suits your specific needs and projects. As the AI landscape continues to evolve, it’s essential to stay informed about emerging frameworks and technologies to stay ahead of the curve.

TensorFlow: Powering Deep Learning Projects

In the dynamic world of artificial intelligence (AI), deep learning stands out as a transformative technology, driving innovation across industries. At the heart of this revolution lies TensorFlow, a powerful open-source machine learning framework developed by Google.

TensorFlow is renowned for its versatility and scalability, making it an ideal choice for a wide range of deep learning projects. It provides a comprehensive suite of tools and libraries, enabling developers to build, train, and deploy sophisticated models with ease.

One of TensorFlow’s key strengths is its support for diverse hardware platforms, including CPUs, GPUs, and TPUs. This flexibility allows developers to optimize their models for performance and efficiency, regardless of the computational resources available.

Furthermore, TensorFlow boasts a vibrant community of developers and researchers, fostering collaboration and knowledge sharing. This active ecosystem provides valuable support and resources, ensuring that users have access to the latest advancements and best practices.

TensorFlow’s extensive documentation and tutorials make it accessible to developers of all skill levels. Whether you’re a seasoned AI professional or a beginner exploring the world of deep learning, TensorFlow offers the resources you need to succeed.

From image recognition and natural language processing to time series analysis and reinforcement learning, TensorFlow has proven its capabilities across various domains. Its applications are constantly expanding, shaping the future of AI and transforming industries worldwide.
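As a small illustration of the build-and-train workflow described above, the following sketch fits a one-feature linear model with TensorFlow's automatic differentiation (`tf.GradientTape`). The data, learning rate, and step count are made up for the example, and a real project would more likely use a higher-level API; this is a minimal sketch, not a recommended training setup.

```python
import tensorflow as tf

# Synthetic data for y = 3x + 2 (made up for illustration).
x = tf.constant([[0.0], [1.0], [2.0], [3.0], [4.0]])
y = 3.0 * x + 2.0

# Trainable parameters of a one-feature linear model.
w = tf.Variable(0.0)
b = tf.Variable(0.0)

learning_rate = 0.05  # assumed value, chosen so plain gradient descent is stable

for step in range(500):
    with tf.GradientTape() as tape:
        prediction = w * x + b
        loss = tf.reduce_mean(tf.square(prediction - y))
    # Automatic differentiation: gradients of the loss with respect to w and b.
    grad_w, grad_b = tape.gradient(loss, [w, b])
    # Plain gradient-descent update applied in place.
    w.assign_sub(learning_rate * grad_w)
    b.assign_sub(learning_rate * grad_b)

# Shows which CPUs, GPUs, or TPUs TensorFlow can see on this machine.
print("devices:", tf.config.list_physical_devices())
print(f"w={w.numpy():.2f}, b={b.numpy():.2f}")
```

The learned `w` and `b` should approach 3 and 2. The same code runs unchanged on whatever supported hardware is available, since TensorFlow handles device placement.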

PyTorch: Flexibility and Dynamic Computation Graphs

In the realm of deep learning, PyTorch stands out as a highly versatile framework favored by many developers. Its strength lies in its ability to construct and manipulate dynamic computation graphs, offering flexibility and intuitive control over the learning process. Unlike static frameworks where the computation graph is fixed beforehand, PyTorch allows for on-the-fly adjustments, enabling dynamic model architectures and adaptive learning strategies.

The flexibility of PyTorch’s dynamic computation graphs empowers researchers and engineers to:

- Experiment with new architectures: PyTorch’s dynamic nature makes it easy to experiment with novel neural network structures without the need to define the entire graph in advance. This allows for rapid prototyping and exploration of different designs.

- Implement complex control flow: PyTorch’s ability to handle loops and conditional statements within the computation graph enables the implementation of intricate learning algorithms with dynamic decision-making processes.

- Develop custom training loops: PyTorch grants developers complete control over the training process, allowing them to tailor the optimization strategy to specific needs. This includes fine-grained control over data loading, parameter updates, and evaluation metrics.

By combining the benefits of dynamic computation graphs with its user-friendly API and extensive ecosystem, PyTorch empowers developers to build innovative and sophisticated deep learning models with unparalleled flexibility and control.
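The points above can be sketched in a few lines. In this example, `DynamicDepthNet` is a hypothetical model, not part of PyTorch, whose forward pass uses an ordinary Python loop, so the number of layers applied can change on every call. The training loop is written by hand rather than delegated to a helper. The data and hyperparameters are invented for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)


class DynamicDepthNet(nn.Module):
    """Hypothetical model that reuses one hidden layer a variable number of times."""

    def __init__(self, in_features=4, hidden=16):
        super().__init__()
        self.input_layer = nn.Linear(in_features, hidden)
        self.hidden_layer = nn.Linear(hidden, hidden)
        self.output_layer = nn.Linear(hidden, 1)

    def forward(self, x, depth):
        h = torch.relu(self.input_layer(x))
        # Ordinary Python loop: the graph is rebuilt on every call,
        # so `depth` can differ from one forward pass to the next.
        for _ in range(depth):
            h = torch.relu(self.hidden_layer(h))
        return self.output_layer(h)


# Synthetic regression data (made up): the target is the sum of the features.
inputs = torch.randn(64, 4)
targets = inputs.sum(dim=1, keepdim=True)

model = DynamicDepthNet()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

# Custom training loop: every step is explicit and can be customized.
losses = []
for step in range(200):
    depth = 1 + step % 3  # depth cycles through 1, 2, 3
    optimizer.zero_grad()
    loss = loss_fn(model(inputs, depth), targets)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first loss: {losses[0]:.3f}, last loss: {losses[-1]:.3f}")
```

Because the graph is recorded as the Python code runs, debugging works with the usual tools: you can set a breakpoint or add a `print` inside `forward`.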

Keras: User-Friendly Deep Learning Library

Deep learning has transformed fields from image recognition to natural language processing, and developers rely on frameworks that streamline model development. Among these, Keras stands out as a user-friendly and versatile library that helps developers of all levels build and deploy deep learning models.

Keras’s primary strength lies in its simplicity and ease of use. The library boasts a concise and intuitive API that makes building and experimenting with deep learning models a breeze. Unlike other frameworks that require extensive configuration, Keras allows developers to focus on the core aspects of their models, such as the architecture, layers, and activation functions. This user-friendly approach accelerates development cycles and fosters innovation.

Another key advantage of Keras is its modularity. It provides a wide range of pre-built layers and functionalities that can be combined and customized to create sophisticated deep learning architectures. This modular design enables developers to readily integrate different components, such as convolutional neural networks (CNNs) for image classification, recurrent neural networks (RNNs) for natural language processing, and generative adversarial networks (GANs) for image generation. By leveraging these pre-built modules, developers can significantly reduce development time and effort.
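To show how pre-built layers stack together, here is a minimal sketch of a small classifier. The dataset is synthetic and the layer sizes, epoch count, and batch size are arbitrary choices for illustration. It assumes the `keras` package is installed along with a supported backend.

```python
import numpy as np
import keras
from keras import layers

# Synthetic binary-classification data (made up for illustration).
rng = np.random.default_rng(0)
features = rng.normal(size=(256, 8)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# Layers are composed like building blocks.
model = keras.Sequential([
    keras.Input(shape=(8,)),
    layers.Dense(16, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

history = model.fit(features, labels, epochs=5, batch_size=32, verbose=0)
loss, accuracy = model.evaluate(features, labels, verbose=0)
print(f"training accuracy after 5 epochs: {accuracy:.2f}")
```

Swapping in a different architecture is mostly a matter of changing the list of layers; the `compile`, `fit`, and `evaluate` calls stay the same.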

Furthermore, Keras is highly portable and supports multiple backend engines. Earlier releases ran on TensorFlow, Theano, and CNTK; Keras 3 runs on TensorFlow, JAX, and PyTorch. This flexibility allows developers to leverage the best resources and optimize their models for specific hardware environments. Whether you are working on a local machine or a cloud-based platform, Keras provides the necessary tools for efficient and scalable deep learning.

In conclusion, Keras stands as a user-friendly and versatile deep learning library that simplifies the development process for both beginners and experienced developers. Its intuitive API, modular design, and support for multiple backends make it a valuable asset for building and deploying powerful AI applications. Whether you are exploring deep learning for the first time or seeking to accelerate your existing projects, Keras offers a comprehensive and streamlined framework to empower your AI journey.

Scikit-learn: Machine Learning Essentials

In the realm of machine learning, Scikit-learn stands as a cornerstone library, empowering developers to build and deploy a wide array of machine learning models. Its simplicity, versatility, and comprehensive documentation make it an ideal starting point for both beginners and experienced practitioners. Let’s delve into the core features that make Scikit-learn indispensable.

Ease of Use: One of Scikit-learn’s greatest strengths lies in its intuitive and user-friendly interface. The library provides a consistent API across its diverse algorithms, making it easy to switch between different models without substantial code changes. This streamlined approach fosters rapid development and experimentation.

Comprehensive Algorithms: Scikit-learn boasts an extensive collection of supervised and unsupervised learning algorithms (it does not cover reinforcement learning). From linear regression and decision trees to support vector machines and random forests, the library offers a broad toolkit for diverse machine learning tasks.

Data Preprocessing: Before feeding data into a machine learning model, it often requires careful preprocessing. Scikit-learn provides a wealth of tools for data cleaning, transformation, and feature engineering, ensuring that your models operate on clean and relevant data.

Model Evaluation: Evaluating the performance of a machine learning model is crucial. Scikit-learn offers a suite of metrics and tools for assessing model accuracy, precision, recall, and other key performance indicators. This enables you to select the most effective models for your specific applications.

Integration: Scikit-learn seamlessly integrates with other popular libraries in the Python data science ecosystem, such as NumPy, Pandas, and Matplotlib. This interoperability allows you to build end-to-end machine learning pipelines with ease.
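The features above fit together in a few lines. This sketch uses the Iris dataset bundled with scikit-learn and trains two different estimators through the same `fit`/`predict` interface, one of them wrapped in a preprocessing pipeline. The choice of models, split size, and random seeds is arbitrary and only meant to illustrate the workflow.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Two different estimators behind the same fit/predict interface.
models = {
    # Preprocessing (scaling) and the classifier are chained into one estimator.
    "logistic_regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=200)
    ),
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: {scores[name]:.2f}")
```

Because every estimator exposes the same methods, trying another algorithm usually means changing a single line in the `models` dictionary.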

In conclusion, Scikit-learn is a powerful and versatile library that empowers developers to build, train, and deploy machine learning models with remarkable efficiency. Its ease of use, comprehensive algorithms, data preprocessing capabilities, robust model evaluation tools, and seamless integration make it a fundamental component of any machine learning workflow.

Microsoft Cognitive Toolkit (CNTK): Scalable Deep Learning

Deep learning drives advances in many domains, and training deep models calls for frameworks that can handle complex computations and large datasets. The Microsoft Cognitive Toolkit (CNTK) is a scalable deep learning framework designed for high-performance training and deployment.

CNTK’s strength lies in its ability to scale seamlessly across multiple GPUs and CPUs, enabling the training of deep neural networks on massive datasets. Its distributed training capabilities allow developers to leverage the computational power of clusters, significantly reducing training time and improving efficiency. This scalability is crucial for tasks such as natural language processing (NLP), computer vision, and speech recognition, where large-scale datasets and complex models are prevalent.

Beyond its scalability, CNTK offers several other compelling features:

- Flexibility: CNTK supports a wide range of deep learning models, including feed-forward neural networks, convolutional neural networks (CNNs), recurrent neural networks (RNNs), and long short-term memory (LSTM) networks.

- Ease of Use: CNTK provides a user-friendly API, making it relatively easy to define and train deep learning models. Its intuitive syntax and comprehensive documentation facilitate rapid prototyping and development.

- Performance: CNTK was built for high performance, with optimized algorithms and efficient memory management. How it compares with other frameworks in training speed depends on the model and hardware.

- Community Support: CNTK is less widely adopted than TensorFlow or PyTorch, and its community of developers and researchers is correspondingly smaller.

While CNTK has made significant contributions to the field of deep learning, its adoption has slowed in recent years, mainly because TensorFlow and PyTorch gained wider community support and a more extensive ecosystem of tools and libraries. Microsoft announced version 2.7 (2019) as the last main release, so CNTK is better suited to maintaining existing projects than to starting new ones.

Caffe: Convolutional Neural Networks for Vision

Caffe is an open-source deep learning framework that was developed at the University of California, Berkeley. It’s specifically designed for high-performance image classification and object detection, making it a go-to choice for computer vision tasks.

Key features of Caffe include:

- High Speed: Caffe is optimized for speed and efficiency, enabling you to train and deploy models quickly.

- Model Zoo: It comes with a rich library of pre-trained models that you can use directly, saving you time and effort.

- Modular Design: Caffe has a modular design, making it easy to customize and extend for specific applications.

- Python Interface: Caffe provides a Python interface, making it easy to integrate with other popular Python libraries.

Caffe is particularly strong in applications like:

- Image Classification: Recognizing and categorizing images, such as classifying different types of animals or objects.

- Object Detection: Locating and identifying objects within an image, such as detecting cars or pedestrians in a scene.

- Image Segmentation: Dividing an image into different regions based on their content.

In conclusion, Caffe is still a reasonable fit for computer vision tasks that prioritize speed and efficiency, and its modularity and strong performance made it a valuable tool for researchers and developers in this area. The project has seen little development since its 1.0 release in 2017, so weigh its maintenance status before choosing it for new work.

Deeplearning4j: Java-Based Deep Learning Platform

Deeplearning4j (DL4J) is a powerful open-source deep learning library for the Java Virtual Machine (JVM). It’s designed for distributed computing and is a great choice for enterprise-level applications that require high performance and scalability.

Here are some of the key features of DL4J:

- Distributed computing: DL4J can leverage multiple CPUs and GPUs to accelerate training and inference.

- Production-ready: It’s built for stability and reliability, making it suitable for real-world applications.

- Java ecosystem integration: DL4J works seamlessly with other popular Java libraries and frameworks.

- Support for various deep learning architectures: It supports popular architectures like convolutional neural networks (CNNs), recurrent neural networks (RNNs), and long short-term memory (LSTM) networks.

- Active community: A vibrant community of developers contributes to the library and provides support.

Use cases for DL4J:

- Natural language processing (NLP)

- Image recognition

- Time series analysis

- Fraud detection

- Recommendation systems

If you’re looking for a robust, enterprise-grade deep learning platform built for the Java ecosystem, DL4J is a compelling choice. Its focus on performance, scalability, and production readiness makes it ideal for large-scale applications.

Theano: Foundational Python Library for Deep Learning

While Theano is no longer actively developed (its original maintainers ended major development after the 1.0 release in 2017), it played a pivotal role in shaping the landscape of deep learning. This Python library, released in 2007, revolutionized the field with its efficient computation of mathematical expressions, particularly those involving multidimensional arrays.

Theano’s core strengths lay in its ability to:

- Optimize and compile mathematical expressions for efficient execution on GPUs and CPUs.

- Handle symbolic differentiation, allowing for automatic gradient calculation – a cornerstone of neural network training.

- Enable efficient computation of complex mathematical functions, particularly those involving large datasets.

Although Theano is now officially in maintenance mode, its impact on the deep learning community is undeniable. It paved the way for other frameworks like TensorFlow, Keras, and PyTorch, which continue to leverage its foundational principles.

Apache MXNet: Scalability and Performance Optimization

Apache MXNet is an open-source deep learning framework known for its scalability and performance optimization capabilities. It was built to handle large-scale datasets and complex models in demanding applications like natural language processing, computer vision, and reinforcement learning. Note that the project was retired to the Apache Attic in 2023 and is no longer actively developed.

Scalability

MXNet’s scalability stems from its efficient distributed training capabilities. It allows training models across multiple machines (CPUs and GPUs), leveraging the power of clusters for faster convergence and handling massive datasets. This distributed training is achieved through MXNet’s parameter server architecture, which enables efficient communication and synchronization between workers.
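The parameter server idea can be illustrated without any framework. The toy simulation below is a concept sketch only and does not use MXNet's API: each simulated "worker" computes a gradient on its own shard of made-up data, and a "server" averages those gradients and updates the shared parameter. Real systems run workers in parallel on separate machines and handle communication, batching, and fault tolerance, none of which is modeled here.

```python
# Toy data for y = 2x, split into one shard per simulated worker.
SHARDS = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0)],
    [(5.0, 10.0), (6.0, 12.0)],
]


def worker_gradient(weight, shard):
    """Gradient of mean squared error for the model y = weight * x on one shard."""
    return sum(2.0 * (weight * x - y) * x for x, y in shard) / len(shard)


def train(shards, learning_rate=0.01, steps=100):
    """Synchronous training: one averaged update per step."""
    weight = 0.0  # the single parameter held by the "server"
    for _ in range(steps):
        # Each worker pulls the current weight and computes a local gradient.
        gradients = [worker_gradient(weight, shard) for shard in shards]
        # The server averages the pushed gradients and applies one update.
        weight -= learning_rate * sum(gradients) / len(gradients)
    return weight


final_weight = train(SHARDS)
print(f"learned weight: {final_weight:.4f}")
```

The learned weight should converge toward 2. Averaging gradients before updating keeps all workers training the same model, which is the synchronization role the parameter server plays.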

Performance Optimization

MXNet prioritizes performance by offering a variety of optimization techniques:

- Automatic differentiation for efficient gradient calculation.

- Optimized kernels for GPU and CPU computation, leveraging libraries like CUDA and OpenMP.

- Memory management features for efficient resource utilization.

- Symbol-based programming allowing for graph optimization and efficient execution.

Benefits

MXNet’s scalability and performance optimization provide numerous benefits:

- Faster training times for complex models and large datasets.

- Improved model accuracy through efficient optimization.

- Reduced training costs by utilizing distributed resources.

- Support for various hardware platforms including CPUs, GPUs, and specialized hardware.

Conclusion

Apache MXNet’s focus on scalability and performance optimization made it well suited to deep learning projects demanding high performance and efficiency. Its distributed training capabilities, optimized kernels, and various optimization features let developers tackle complex models and large-scale datasets effectively. Because the project is no longer actively developed, check its maintenance status before adopting it for new work.

Choosing the Right Framework for Your AI Project

Choosing the right framework is crucial for a successful AI project. With numerous options available, each with its own strengths and limitations, the choice can be overwhelming. This section summarizes the factors to weigh so you can make an informed decision.

First and foremost, consider your project’s specific needs and goals. Are you working on computer vision, natural language processing, or reinforcement learning? What is the desired level of complexity and scalability? Understanding these aspects will help you narrow down the choices.

Next, assess your team’s expertise and experience. Some frameworks require advanced knowledge and programming skills, while others offer simpler interfaces for beginners. Choosing a framework that aligns with your team’s capabilities will ensure smooth development and minimize learning curves.

Additionally, consider the framework’s community support and documentation. A large and active community can provide valuable assistance, troubleshooting, and shared resources. Well-maintained documentation will streamline learning and implementation.

Finally, take into account the framework’s performance, scalability, and integration capabilities. Choose a framework that can handle your project’s demands and seamlessly integrate with existing systems or tools.

By carefully evaluating these factors, you can select the most suitable AI framework for your project, ensuring efficient development and achieving desired outcomes. Remember, the right framework can be a game-changer, propelling your AI endeavors forward.

Exploring Emerging AI Frameworks and Trends

New AI frameworks and trends emerge constantly, and keeping up with them helps developers stay ahead of the curve. This section explores some of the emerging frameworks and trends that are shaping AI in 2024.

Generative AI Frameworks

Generative AI, which focuses on creating new content, is a major area of innovation. Models like Stable Diffusion and DALL-E 2 are making headlines with their ability to generate images from text prompts. In text generation, GPT-4 has pushed the boundaries of language models, showing strong capabilities in summarization, translation, and code generation.

Edge AI and Decentralized AI

The rise of edge AI is enabling AI to operate directly on devices like smartphones and IoT sensors, reducing latency and dependence on cloud infrastructure. This trend is complemented by decentralized AI, which aims to democratize AI by allowing users to train and run models on their own devices without relying on centralized servers. Together, these approaches are paving the way for more privacy-focused and distributed AI applications.

AI for Sustainability

The intersection of AI and sustainability is gaining momentum. AI systems are being developed to optimize energy consumption, reduce waste, and improve resource management. For instance, AI-powered systems are being used to monitor environmental conditions, predict natural disasters, and design more efficient energy grids. Applying AI to these problems is one way the field can help address global challenges.

Conclusion

The AI landscape is dynamic and continuously evolving. By staying informed about emerging AI frameworks and trends, developers can leverage these advancements to build innovative and impactful AI applications. Whether it’s exploring the possibilities of generative AI, adopting edge AI for real-time applications, or contributing to sustainable solutions, the future of AI holds tremendous potential for developers and society as a whole.