In today’s rapidly evolving technological landscape, Artificial Intelligence (AI) and Machine Learning (ML) are transforming industries around the world. From automating routine tasks to uncovering insights hidden in large datasets, AI and ML help businesses and individuals work more efficiently and innovate faster. This guide surveys the essential AI and ML tools and explains what each one is good for.

Whether you’re a seasoned data scientist or a curious beginner, this guide will give you the knowledge and resources to start putting AI and ML to work. We’ll explore a wide range of tools, including open-source libraries, cloud platforms, and specialized software, each designed to streamline specific tasks and help you build intelligent applications. By understanding the capabilities and limitations of these tools, you can make informed decisions about which ones best suit your needs and goals.

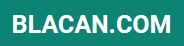

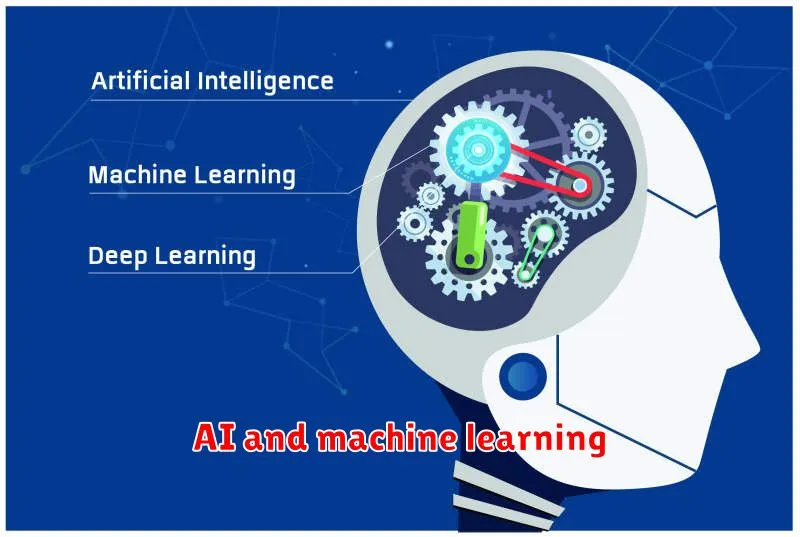

Understanding the Basics of AI and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are two closely related fields that are rapidly transforming our world. Although the terms are often used interchangeably, they are not the same thing: ML is one of the main approaches used to build AI systems. Let’s break down the fundamentals.

AI encompasses the broad concept of creating intelligent machines that can perform tasks typically requiring human intelligence. This includes tasks like problem-solving, decision-making, and learning from data. Think of AI as the overarching goal – to create machines that can “think” like humans.

ML is a subset of AI that focuses on enabling computers to learn from data without being explicitly programmed. Instead of relying on predefined rules, ML algorithms identify patterns in data and use them to make predictions or decisions. They learn by being trained on examples, and their accuracy generally improves as they are exposed to more, and more representative, data.

Think of how a child learns to recognize a cat. Nobody hands the child a list of rules; instead, the child sees many cats and non-cats and gradually learns to tell them apart. ML works the same way: rather than programming rules for what a cat looks like, you show the algorithm many labeled examples of cats and non-cats, and it identifies the patterns that distinguish them, making predictions and improving its accuracy with experience.
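To make the analogy concrete, here is a minimal Python sketch of learning from labeled examples. It assumes each image has already been reduced to two numeric features (hypothetical ones, invented for illustration). No rule about cats appears anywhere in the code: the program averages the labeled examples for each class and assigns a new input to whichever average it is closest to, a technique known as a nearest-centroid classifier.

```python
from math import dist

# Hypothetical features per image, each scaled 0 to 1:
# (ear pointiness, whisker length). Real systems learn from raw pixels or richer features.


def train(examples):
    """Average the feature vectors of each label to get one centroid per label."""
    sums = {}
    counts = {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


training_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.2, 0.1), "not cat"),
    ((0.3, 0.2), "not cat"),
]

model = train(training_data)
print(predict(model, (0.85, 0.75)))  # cat
print(predict(model, (0.25, 0.30)))  # not cat
```

Adding more, and more varied, labeled examples gives better estimates of each class average, which is the "improving with experience" part of the analogy. Real models are far more sophisticated, but the train-then-predict structure is the same.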

Key Applications of AI and Machine Learning Across Industries

Artificial intelligence (AI) and machine learning (ML) are rapidly transforming industries across the globe. From healthcare to finance, manufacturing to retail, these technologies are revolutionizing how businesses operate, make decisions, and interact with customers. This article explores some key applications of AI and ML across various sectors, showcasing their immense potential to drive innovation and efficiency.

Healthcare

In the healthcare industry, AI and ML are being used to:

- Diagnose diseases: AI algorithms can analyze medical images like X-rays and MRIs to detect abnormalities, aiding in early diagnosis of conditions such as cancer and heart disease.

- Personalize treatment plans: ML models can predict patient outcomes based on individual health data, allowing doctors to tailor treatments for optimal results.

- Develop new drugs and therapies: AI is accelerating drug discovery by analyzing vast amounts of data to identify promising drug candidates.

Finance

The financial sector is embracing AI and ML for:

- Fraud detection: ML models can analyze transaction data to flag suspicious activity in real time, helping to prevent financial losses.

- Credit risk assessment: AI algorithms can evaluate loan applications and assess creditworthiness, making lending decisions more accurate and efficient.

- Personalized financial advice: AI-powered chatbots and robo-advisors can provide tailored financial recommendations based on individual needs and goals.

Manufacturing

AI and ML are transforming manufacturing processes by:

- Predictive maintenance: AI algorithms can analyze sensor data from machines to predict potential failures, reducing downtime and maintenance costs.

- Process optimization: ML models can optimize production lines by identifying bottlenecks and improving efficiency.

- Quality control: AI-powered vision systems can inspect products for defects, ensuring high quality and reducing manual labor.

Retail

AI and ML are enhancing the retail experience through:

- Personalized recommendations: AI algorithms can analyze customer data to suggest relevant products and offers, improving customer satisfaction and sales.

- Chatbots for customer service: AI-powered chatbots can provide instant customer support, answering queries and resolving issues efficiently.

- Inventory management: ML models can predict demand and optimize inventory levels, reducing stockouts and overstocking.

The applications of AI and ML extend far beyond these examples, impacting various other industries such as transportation, education, and energy. As these technologies continue to evolve, their impact on our lives and the world around us will only grow more significant.

Open-Source AI and Machine Learning Libraries and Frameworks

The world of artificial intelligence (AI) and machine learning (ML) is rapidly evolving, driven by the power of open-source tools. These libraries and frameworks offer developers access to cutting-edge algorithms, pre-trained models, and a vibrant community of contributors. This guide explores some of the most essential open-source tools for unlocking the potential of AI and ML.

TensorFlow

Developed by Google, TensorFlow is a powerful and versatile library for numerical computation, especially in the realm of deep learning. Its flexible architecture allows models to run on a range of hardware, from CPUs and GPUs to specialized AI accelerators such as Google’s TPUs.

PyTorch

Another leading deep learning library, PyTorch, is known for its ease of use and dynamic computational graph. Its intuitive Pythonic interface and strong community support make it a favorite for research and development.

Scikit-learn

For classical machine learning tasks in Python, scikit-learn is a go-to library. It provides a comprehensive set of tools for classification, regression, clustering, and dimensionality reduction. Its simplicity and efficiency make it suitable for both beginners and experienced practitioners.

Keras

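Keras is a user-friendly, high-level API for building neural networks. Early versions could run on top of TensorFlow, Theano, or CNTK, but Theano and CNTK are no longer supported; Keras 3 runs on TensorFlow, JAX, or PyTorch. Keras simplifies the process of building and training deep learning models, making it accessible even for those without extensive experience.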

Apache MXNet

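Apache MXNet is a scalable and efficient deep learning library designed for distributed training, with a modular design that supports deployment across diverse hardware platforms. Note, however, that the project was retired to the Apache Attic in 2023 and is no longer actively developed, so it is better suited to maintaining existing code than to starting new projects.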

OpenCV

OpenCV (Open Source Computer Vision Library) is widely used for computer vision tasks such as object detection, image processing, and video analysis. It provides a vast collection of algorithms and tools for working with visual data.

These are just a few examples of the many powerful open-source AI and ML libraries available. By leveraging these tools, developers can unlock the full potential of these technologies, driving innovation across various industries.

Cloud-Based AI and Machine Learning Platforms

Developing and deploying AI models requires substantial computing power, storage, and tooling. Rather than building all of that infrastructure themselves, many organizations turn to cloud-based platforms, which provide a flexible and scalable environment for developing and deploying AI models.

Cloud-based AI and ML platforms offer a range of benefits, including:

- Scalability and Flexibility: Cloud platforms allow organizations to easily scale their AI infrastructure up or down as needed, accommodating fluctuating workloads and data volumes.

- Cost-Effectiveness: With pay-as-you-go pricing on shared infrastructure, organizations can avoid the high upfront costs of building and maintaining their own AI infrastructure, although costs for sustained, heavy workloads still need careful monitoring.

- Pre-built AI Services: Many cloud platforms offer pre-trained AI models and services that can be readily integrated into applications, saving time and effort in model development.

- Simplified Management: Cloud providers take care of much of the infrastructure maintenance, software updates, and security of the underlying platform, freeing up data scientists and developers to focus on building AI models.

Some popular cloud-based AI and ML platforms include:

- Amazon Web Services (AWS): AWS offers a comprehensive suite of AI and ML services, including Amazon SageMaker, Amazon Rekognition, and Amazon Comprehend.

- Microsoft Azure: Azure provides a wide range of AI and ML tools, including Azure Machine Learning, Azure AI Services (formerly Azure Cognitive Services), and Azure Bot Service.

- Google Cloud Platform (GCP): GCP offers AI and ML services like Vertex AI (the successor to Google Cloud AI Platform), the Cloud Vision API, and the Cloud Natural Language API.

These platforms provide the foundation for organizations to develop and deploy innovative AI and ML solutions, driving business growth and improving decision-making.

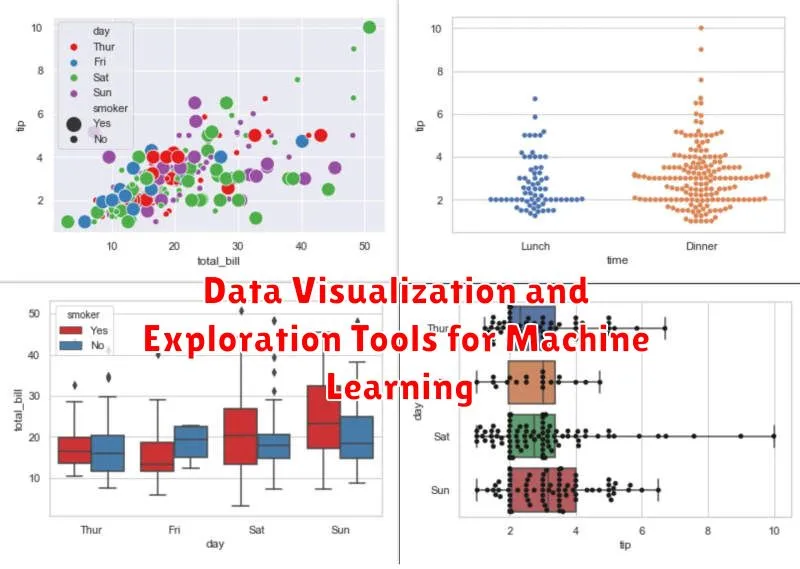

Data Visualization and Exploration Tools for Machine Learning

In the realm of machine learning, data is the lifeblood. Before you can build powerful algorithms or train sophisticated models, you need to understand your data thoroughly. This is where data visualization and exploration tools come into play. They empower you to gain insights, identify patterns, and uncover hidden relationships within your datasets, ultimately leading to more effective and accurate machine learning models.

The Power of Visualizing Data

Visualizing data isn’t just about creating pretty charts; it’s about unlocking the power of human perception. Our brains are wired to process visual information incredibly efficiently. By representing data graphically, we can:

- Spot anomalies and outliers: These can be indicative of data errors, interesting patterns, or even fraudulent activity.

- Identify trends and correlations: Visualizing data can reveal relationships between variables that might not be immediately apparent from looking at raw numbers.

- Communicate insights effectively: Visualizations make it easier to share complex data stories with stakeholders, fostering better collaboration and understanding.

Essential Tools for Data Exploration

The world of data visualization is vast, but some tools stand out for their ease of use, versatility, and powerful features. Here are a few popular options:

- Tableau: Renowned for its user-friendly interface and ability to create stunning interactive dashboards.

- Power BI: A robust business intelligence tool from Microsoft, offering a wide array of data visualization options.

- Python Libraries (Matplotlib, Seaborn, Plotly): Versatile and highly customizable libraries for creating static and interactive visualizations within Python coding environments. These are particularly popular among data scientists and machine learning practitioners.

- R Libraries (ggplot2): Similar to Python libraries, R libraries offer extensive capabilities for data visualization. ggplot2 is a popular choice for its elegant and expressive syntax.

Beyond Visualization: The Role of Exploratory Data Analysis (EDA)

Data visualization is a key part of exploratory data analysis (EDA). EDA encompasses a broader range of techniques for understanding your data, including:

- Summary statistics: Calculating measures like mean, median, standard deviation, and percentiles to get a basic understanding of data distribution.

- Data cleaning and preprocessing: Identifying and addressing missing values, outliers, and inconsistencies in your data.

- Feature engineering: Transforming and combining existing variables to create new features that might be more informative for your machine learning model.
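The three EDA steps above can be tried before reaching for any plotting library. The sketch below uses only Python’s standard `statistics` module on a small, made-up table of apartment listings. The column names, the 1.5 × IQR outlier rule, and median imputation are illustrative choices, not the only reasonable ones.

```python
import statistics

# Hypothetical raw records; None marks a missing value.
rows = [
    {"area_m2": 50, "price": 150_000},
    {"area_m2": 65, "price": 190_000},
    {"area_m2": None, "price": 175_000},
    {"area_m2": 80, "price": 240_000},
    {"area_m2": 72, "price": 210_000},
    {"area_m2": 58, "price": 170_000},
    {"area_m2": 900, "price": 230_000},  # suspicious: probably a data-entry error
]

# Summary statistics on the observed values of one column.
areas = [r["area_m2"] for r in rows if r["area_m2"] is not None]
print("mean:", statistics.mean(areas))
print("median:", statistics.median(areas))
print("stdev:", round(statistics.stdev(areas), 1))

# Outlier check with the interquartile-range (IQR) rule: flag values more than
# 1.5 * IQR below the first quartile or above the third quartile.
q1, _, q3 = statistics.quantiles(areas, n=4, method="inclusive")
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [a for a in areas if a < low or a > high]
print("outliers:", outliers)  # outliers: [900]

# Cleaning: drop flagged outliers, then fill missing values with the median
# of the remaining observed values (one simple imputation choice among many).
clean_median = statistics.median([a for a in areas if a not in outliers])
cleaned = []
for r in rows:
    area = r["area_m2"]
    if area in outliers:
        continue
    if area is None:
        area = clean_median
    # Feature engineering: derive price per square metre from two raw columns.
    cleaned.append({**r, "area_m2": area, "price_per_m2": r["price"] / area})

for r in cleaned:
    print(r)
```

Note how the single bad value pulls the mean far above the median; spotting that gap in summary statistics is often the first hint that a column needs cleaning.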

By combining data visualization with other EDA techniques, you can gain a comprehensive understanding of your data, paving the way for building more effective and insightful machine learning models.

Natural Language Processing (NLP) Tools and Libraries

Natural Language Processing (NLP) is a field of Artificial Intelligence (AI) and Machine Learning (ML) that enables computers to understand, interpret, and generate human language. NLP has revolutionized how we interact with technology, leading to advancements in various domains, including chatbots, machine translation, sentiment analysis, and more. To leverage the power of NLP, developers and researchers rely on a wide range of tools and libraries that provide the necessary algorithms, functionalities, and resources. In this article, we will delve into some of the most essential NLP tools and libraries available today.

NLTK (Natural Language Toolkit) is a leading Python library for NLP. It offers a comprehensive collection of tools for text processing, including tokenization, stemming, lemmatization, part-of-speech tagging, named entity recognition, and more. NLTK’s large collection of corpora and lexical resources, such as WordNet, makes it a valuable resource for both beginners and experienced NLP practitioners.

spaCy is another popular Python library known for its speed and efficiency. It provides trained pipelines for tasks like named entity recognition, part-of-speech tagging, dependency parsing, and text classification (which can be trained for tasks such as sentiment analysis). spaCy’s intuitive API and optimized architecture make it well suited to building robust NLP applications.

Gensim is a Python library specializing in topic modeling and document similarity. It implements algorithms such as Latent Dirichlet Allocation (LDA) for discovering topics and Word2Vec for learning word embeddings, allowing you to uncover hidden themes and relationships within large text collections. Gensim’s capabilities are useful for tasks such as document clustering, information retrieval, and recommendation systems.
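Document similarity rests on a simple idea: represent each document as a vector and compare the vectors. The library-free sketch below (not Gensim’s API) shows that idea with bag-of-words counts and cosine similarity; the three sample documents and the query are made up. Libraries like Gensim can apply the same kind of comparison to richer representations, such as TF-IDF weights, LDA topic mixtures, or word embeddings, which can capture similarity even when documents share few exact words.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text, split it into word tokens, and count each token."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors (0.0 = no shared words)."""
    dot = sum(count * b[token] for token, count in a.items())  # Counter returns 0 for missing tokens
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


docs = {
    "d1": "The cat sat on the mat.",
    "d2": "A cat lay on a mat.",
    "d3": "Stock prices rose sharply today.",
}
vectors = {name: bag_of_words(text) for name, text in docs.items()}

# Rank documents by similarity to a short query, most similar first.
query = bag_of_words("cat on a mat")
ranked = sorted(vectors, key=lambda name: cosine_similarity(query, vectors[name]), reverse=True)
print(ranked)  # ['d2', 'd1', 'd3']
```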

Stanford CoreNLP is a comprehensive Java-based NLP toolkit developed at Stanford University. It offers a wide range of functionalities, including sentence splitting, tokenization, part-of-speech tagging, named entity recognition, coreference resolution, and sentiment analysis. Stanford CoreNLP is a powerful tool for researchers and developers who require a robust and well-documented NLP framework.

Hugging Face Transformers is a library that provides access to a vast collection of pre-trained NLP models, including BERT, GPT-2, and XLNet. These models are trained on massive datasets and can be fine-tuned for various downstream tasks. By making state-of-the-art models easy to download, run, and fine-tune, Hugging Face Transformers has become a standard tool for researchers and practitioners alike.

In addition to these libraries, there are other notable tools and resources available for NLP, such as:

- Google Cloud Natural Language API: A cloud-based service for text analysis tasks.

- Amazon Comprehend: A similar service offered by Amazon Web Services.

- OpenNLP: An open-source Java library for NLP tasks.

Choosing the right NLP tools and libraries depends on the specific task, the programming language you are using, and the level of expertise you have. By exploring these options and leveraging their functionalities, you can unlock the power of NLP and build intelligent applications that can understand and interact with human language in meaningful ways.

Computer Vision Tools and Libraries for Image Analysis

Computer vision, a field of artificial intelligence (AI) and machine learning (ML), enables computers to “see” and interpret images and videos. It powers applications ranging from quality inspection in factories to medical imaging and driver-assistance systems. The tools and libraries below provide the building blocks developers and researchers use to create computer vision applications.

OpenCV, a widely used open-source library, is a cornerstone of computer vision development. It offers a comprehensive set of algorithms and functions for tasks such as image processing, object detection, and tracking. OpenCV’s cross-platform compatibility and robust performance make it an invaluable resource for various projects.

TensorFlow, another powerful framework, excels in deep learning applications. Its flexible architecture and extensive documentation enable the development of advanced computer vision models for image classification, segmentation, and more. TensorFlow’s ability to handle large datasets and complex models makes it suitable for real-world scenarios.

PyTorch, a deep learning library with a dynamic computational graph, provides a user-friendly interface for research and prototyping. Its Python-centric approach and focus on flexibility make it a popular choice for researchers and developers working on cutting-edge computer vision projects.

scikit-image, a library built on NumPy and SciPy, offers a comprehensive collection of image processing algorithms. Its emphasis on scientific computing and image analysis makes it an ideal tool for tasks such as image filtering, segmentation, and feature extraction.
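Image filtering, the first task mentioned above, comes down to sliding a small kernel over the pixel grid and combining neighboring values. The plain-Python sketch below is not scikit-image’s API (which offers optimized functions such as `skimage.filters.sobel`); it applies a horizontal Sobel kernel to a hypothetical 5×5 grayscale image so that a vertical edge shows up as large values.

```python
# Horizontal Sobel kernel: responds strongly to left-to-right intensity changes.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def filter2d(image, kernel):
    """Slide a 3x3 kernel over a grayscale image ("valid" region only, no padding).

    This computes cross-correlation (the kernel is not flipped), the usual
    convention in image-processing code.
    """
    height, width = len(image), len(image[0])
    out = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            total = 0
            for ky in range(3):
                for kx in range(3):
                    total += kernel[ky][kx] * image[y + ky - 1][x + kx - 1]
            row.append(total)
        out.append(row)
    return out


# A tiny 5x5 image: dark on the left (0), bright on the right (9).
image = [[0, 0, 9, 9, 9] for _ in range(5)]

for row in filter2d(image, SOBEL_X):
    print(row)  # each row: [36, 36, 0]
```

The response is large where the window straddles the dark-to-bright boundary and zero over the uniform bright region, which is exactly what an edge detector should report.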

Dlib, a C++ library with Python bindings, provides a range of machine learning tools, including computer vision algorithms. Its focus on performance and accuracy makes it suitable for applications requiring real-time processing, such as facial recognition and object tracking.

These computer vision tools and libraries give developers and researchers the building blocks for applications that range from medical imaging to autonomous driving, making computer vision one of the most transformative areas of AI.

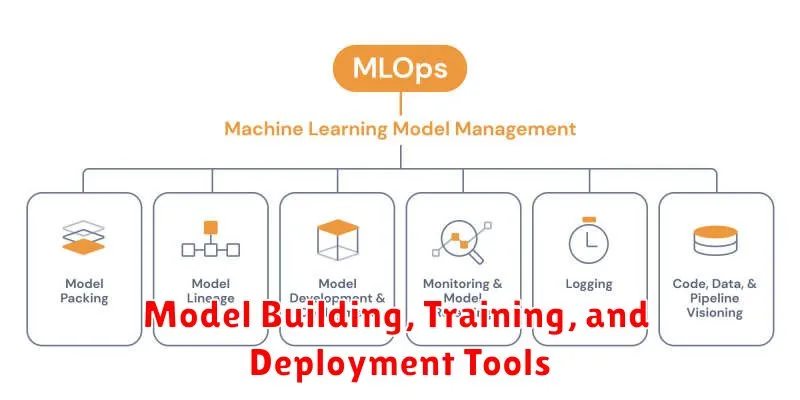

Model Building, Training, and Deployment Tools

Once you’ve decided on the best AI and Machine Learning models for your project, it’s time to get into the nitty-gritty details of building, training, and deploying them. This process involves using powerful tools designed to streamline the workflow and optimize your models. These tools are essential for turning your data into actionable insights and making your AI projects a reality.

Model Building Tools

Building models is where the real magic begins. Powerful tools help you define the architecture of your models, specify the algorithms, and experiment with different configurations. Here are some popular options:

- TensorFlow: An open-source library developed by Google that provides a comprehensive framework for building, training, and deploying machine learning models. Its flexibility and scalability make it suitable for various tasks, from image recognition to natural language processing.

- PyTorch: Another popular open-source deep learning library known for its ease of use and dynamic computational graphs. PyTorch is a favorite among researchers and developers for its flexibility and intuitive interface.

- Scikit-learn: A Python library that offers a wide range of machine learning algorithms, including classification, regression, clustering, and dimensionality reduction techniques. It’s a great starting point for beginners and provides a solid foundation for building various models.

Training Tools

Training is crucial for teaching your model to learn patterns and make predictions based on your data. The process involves repeatedly passing training data through the model and adjusting its parameters to reduce its errors until it reaches the desired performance. Training tools help manage this process effectively, especially when large datasets or models call for specialized hardware.

- Google Colaboratory (Colab): A cloud-based notebook platform that provides free, usage-limited access to GPUs and TPUs (paid tiers offer more compute), making it a popular choice for training computationally intensive deep learning models. Colab lets you write and execute Python code, collaborate with others, and share your work.

- Amazon SageMaker: A fully managed machine learning platform offered by Amazon Web Services (AWS). It provides a comprehensive suite of tools for building, training, and deploying models, including a managed environment for training, hyperparameter tuning, and model monitoring.

- Azure Machine Learning studio: The web interface of Microsoft’s Azure Machine Learning service. It lets you create, train, and deploy machine learning models with little or no code through options such as automated ML and a drag-and-drop designer, along with a wide range of pre-built components.
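Whichever service runs it, training comes down to the parameter-refinement loop described above: measure the error, work out how each parameter affects it, and adjust. The plain-Python sketch below fits a straight line by gradient descent on synthetic data; the learning rate and number of passes are arbitrary choices that happen to work for this toy problem. Frameworks such as TensorFlow and PyTorch compute the gradients automatically and run the same kind of loop on GPUs or TPUs.

```python
# Fit y ~ w * x + b by gradient descent on mean squared error (MSE).
# Synthetic data generated from y = 2x + 1 with no noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = 0.0, 0.0          # parameters start uninformed
learning_rate = 0.05     # step size, chosen by hand for this toy problem
n = len(xs)

for _ in range(2000):
    # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    grad_w = (2 / n) * sum(e * x for e, x in zip(errors, xs))
    grad_b = (2 / n) * sum(errors)
    # Step each parameter against its gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # 2.0 1.0
```

If the learning rate is set too high, the updates overshoot and the loss grows instead of shrinking; tuning such hyperparameters is one of the jobs that managed services like SageMaker help automate.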

Deployment Tools

Once your model is trained, you need to deploy it to make it accessible for use in real-world applications. Deployment tools help you package and serve your models, ensuring they can process new data and deliver predictions efficiently.

- Flask: A lightweight Python web framework that allows you to create REST APIs for deploying your models. It provides a simple way to expose your model’s predictions through web requests, making it readily available for integration into various applications.

- FastAPI: A modern, high-performance Python framework for building APIs. It uses Python type hints to validate request data and generate interactive API documentation automatically, and its support for asynchronous request handling makes it well suited to high-throughput model serving.

- Docker: A platform that allows you to package your application and all its dependencies into a container. This ensures that your model runs consistently across different environments, simplifying deployment and making it portable.
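To show what exposing a model through web requests involves, here is a sketch that uses only Python’s standard-library `http.server` instead of Flask or FastAPI, so it runs without installing anything. The "model" is a hypothetical hard-coded linear function, and the `/predict` route and `{"x": ...}` payload shape are assumptions for illustration; a real service would load a trained model from disk and run behind a production-grade server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical "trained" model: a fixed linear function standing in for real weights.
WEIGHT, BIAS = 2.0, 1.0


def predict(payload):
    """Validate a JSON payload like {"x": 3} and return a prediction dict."""
    x = payload.get("x")
    if isinstance(x, bool) or not isinstance(x, (int, float)):
        raise ValueError('expected a numeric field "x"')
    return {"prediction": WEIGHT * x + BIAS}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            body, status = predict(payload), 200
        except (ValueError, AttributeError) as exc:  # bad JSON, wrong shape, or bad field
            body, status = {"error": str(exc)}, 400
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def run(port=8000):
    """Serve predictions at http://localhost:<port>/predict until interrupted."""
    HTTPServer(("", port), PredictHandler).serve_forever()
```

Calling `run(8000)` starts the server, and a request such as `curl -X POST localhost:8000/predict -d '{"x": 3}'` should then return `{"prediction": 7.0}`. Flask and FastAPI replace the handler class with a decorated function and add routing, validation, and documentation on top; the resulting script is also what you would package into a Docker image.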

By utilizing these powerful tools, you can streamline the entire process of building, training, and deploying your AI models. This allows you to focus on what matters most: creating innovative solutions and extracting valuable insights from your data.

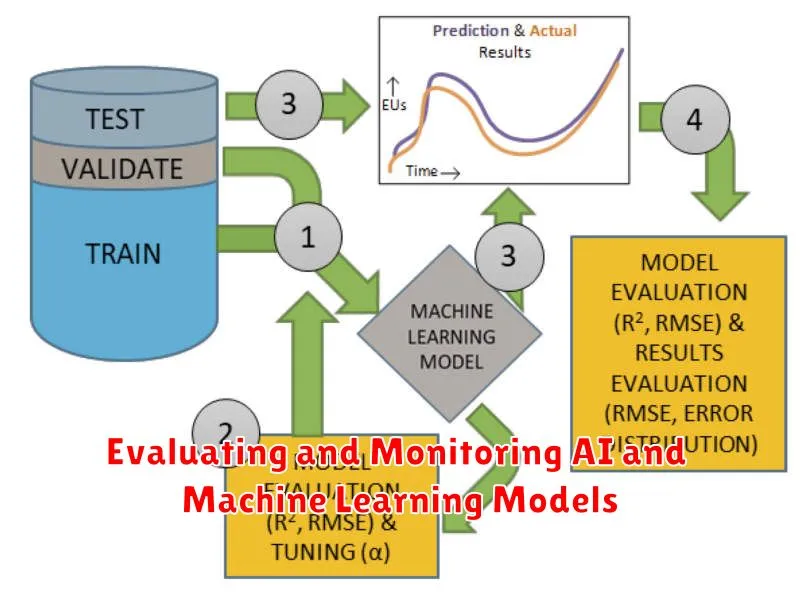

Evaluating and Monitoring AI and Machine Learning Models

Once you’ve built an AI or machine learning model, it’s crucial to assess its performance and ensure it continues to function effectively over time. This involves a process of evaluation and monitoring.

Evaluation focuses on assessing the model’s accuracy, bias, and overall effectiveness using metrics suited to the task. For classification models, common evaluation metrics include:

- Accuracy: Measures how often the model correctly predicts the target variable.

- Precision: Measures the proportion of true positives among all predicted positives.

- Recall: Measures the proportion of true positives among all actual positives.

- F1 Score: The harmonic mean of precision and recall, which gives a single score that is high only when both are high.
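These metrics follow directly from counting a classifier’s hits and misses. The sketch below computes them from scratch for a binary problem with made-up fraud labels; in practice, scikit-learn’s `sklearn.metrics` module provides `accuracy_score`, `precision_score`, `recall_score`, and `f1_score`.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed positives
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Of everything predicted positive, how much really was positive?
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Of everything that really was positive, how much did the model find?
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 1 = fraud, 0 = legitimate.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

With only four positives among ten cases, accuracy (0.7) looks healthier than recall (0.5), which reveals that half of the fraudulent cases were missed. On imbalanced problems like fraud detection, precision and recall are usually more informative than accuracy alone.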

Monitoring involves continuously tracking the model’s performance in a production environment. This helps identify drift in the input data or changes in model behavior that might affect its accuracy. Key aspects of monitoring include:

- Data Drift: Monitoring changes in the distribution of input data over time.

- Model Performance Degradation: Tracking metrics like accuracy and recall to detect any decline in performance.

- Bias Detection: Identifying and addressing any unfair biases that may emerge in the model’s predictions.
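One widely used way to quantify data drift for a single numeric feature is the Population Stability Index (PSI), which compares the feature’s distribution at training time with its distribution in production. The sketch below is a minimal version under stated assumptions: it uses equal-width bins taken from the reference sample (quantile bins are another common choice), and the sample data are synthetic.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """Bin both samples on the reference (expected) sample's range and sum
    (a_i - e_i) * ln(a_i / e_i) over the bins, where a_i and e_i are the
    proportions of each sample falling in bin i."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the reference range into the edge bins.
            i = min(max(int((v - lo) / width), 0), bins - 1)
            counts[i] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


reference = [i / 10 for i in range(100)]  # synthetic training-time feature values
same = list(reference)                    # production data with no drift
shifted = [v + 3 for v in reference]      # production values drifted upward

print(round(population_stability_index(reference, same), 3))  # 0.0
print(round(population_stability_index(reference, shifted), 3))
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds are best tuned per feature and per use case.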

Regular evaluation and monitoring are essential for building trust in AI and machine learning models. By continuously assessing and refining these models, you can unlock their full potential and ensure they deliver accurate, unbiased, and reliable results.

Ethical Considerations and Best Practices in AI and Machine Learning

As AI and machine learning (ML) become increasingly integrated into our lives, it’s crucial to consider the ethical implications of these technologies. While AI offers immense potential to solve complex problems and improve our lives, it’s essential to ensure that its development and deployment are guided by ethical principles.

Bias and Fairness

AI systems are trained on data, and if that data contains biases, the resulting system will likely reflect those biases. This can lead to unfair outcomes for certain groups of people. For example, an AI system used for hiring might unintentionally discriminate against women or minorities if the training data reflects historical hiring practices that were biased. It’s essential to carefully examine the data used to train AI systems and take steps to mitigate any potential biases.
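A simple first check for this kind of bias is to compare how often a model selects people from each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest; the group names and outcomes are hypothetical. In US employment contexts, a ratio below 0.8 (the "four-fifths rule") is commonly treated as a sign of possible adverse impact. A low ratio is a prompt to investigate, not proof of discrimination, and it captures only one of several definitions of fairness.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> selection rate per group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def adverse_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 if nobody is selected)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening outcomes from a hiring model.
decisions = (
    [("group_a", True)] * 30 + [("group_a", False)] * 70
    + [("group_b", True)] * 18 + [("group_b", False)] * 82
)

rates = selection_rates(decisions)
ratio = adverse_impact_ratio(rates)
print(rates)            # {'group_a': 0.3, 'group_b': 0.18}
print(round(ratio, 2))  # 0.6
if ratio < 0.8:
    print("Selection rates differ enough to warrant a closer look.")
```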

Privacy and Security

AI and ML often require access to large amounts of personal data. Ensuring the privacy and security of this data is paramount. Data breaches can have severe consequences, leading to identity theft, financial loss, and reputational damage. Companies developing and deploying AI systems must implement robust security measures and adhere to data privacy regulations.
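One concrete safeguard is pseudonymization: replacing direct identifiers such as email addresses with tokens before the data reaches a training pipeline. The sketch below uses a keyed hash (HMAC-SHA256 from Python’s standard library); the record fields are hypothetical, and key storage, rotation, and access control are deliberately left out. Under the GDPR, pseudonymized data still counts as personal data, so this technique reduces risk rather than removing regulatory obligations.

```python
import hashlib
import hmac
import secrets

# Assumption for the demo: a random key generated at startup. In practice the key
# would come from a secrets manager and never be stored alongside the data.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token (64 hex chars).

    The same input and key always give the same token, so records can still be
    joined, but someone without the key cannot recompute tokens from candidate
    email addresses to link them back to people.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


record = {"email": "jane@example.com", "age": 34, "purchases": 12}
training_row = {**record, "email": pseudonymize(record["email"])}
print(training_row)
```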

Transparency and Explainability

AI systems can sometimes make decisions that are difficult to understand. This lack of transparency can make it challenging to identify and address potential problems. It’s important to develop AI systems that are transparent and explainable. This means being able to understand how the system arrived at a particular decision and providing users with insights into the reasoning behind it.
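For simple models, an explanation can be computed exactly. The sketch below uses a hypothetical linear credit-scoring model (feature names, weights, and the baseline applicant are all invented) and attributes the difference between an applicant’s score and a baseline score to individual features. Methods such as SHAP and LIME extend this idea of per-feature attributions to complex models, where it can only be approximated.

```python
# Hypothetical linear model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = 1.0


def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def explain(applicant, baseline):
    """Attribute the score difference from a baseline applicant to each feature.

    For a linear model this decomposition is exact:
    score(applicant) - score(baseline) == sum of the contributions.
    Returned largest-impact first.
    """
    contributions = {f: WEIGHTS[f] * (applicant[f] - baseline[f]) for f in WEIGHTS}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


baseline = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 0}  # e.g. a typical applicant
applicant = {"income_k": 40, "debt_ratio": 0.5, "late_payments": 2}

for feature, delta in explain(applicant, baseline):
    print(f"{feature:>14}: {delta:+.2f}")
print(f"score change: {score(applicant) - score(baseline):+.2f}")
```

The output tells the applicant, in plain terms, that late payments lowered the score most, followed by the debt ratio and then income, which is the kind of reasoning users and auditors need to see.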

Accountability and Responsibility

Determining who is accountable for the actions of AI systems can be complex. If an AI system makes a mistake or causes harm, it’s important to establish a clear chain of responsibility. This includes identifying the individuals or organizations responsible for developing, deploying, and maintaining the system. This accountability framework will help to ensure that any potential negative consequences are mitigated and that lessons are learned from past mistakes.

Best Practices for Ethical AI

Here are some best practices for developing and deploying AI systems ethically:

- Use diverse and representative datasets to train AI systems to minimize bias.

- Implement strong privacy and security measures to protect user data.

- Design systems that are transparent and explainable to foster trust and accountability.

- Establish clear guidelines for accountability and responsibility for AI system actions.

- Engage with stakeholders to gather feedback and address concerns.

By adhering to these ethical considerations and best practices, we can harness the power of AI and ML for good, ensuring that these transformative technologies benefit all of society.

The Future of AI and Machine Learning: Trends to Watch

Artificial Intelligence (AI) and Machine Learning (ML) are rapidly transforming various aspects of our lives, from how we interact with technology to how businesses operate. As these technologies continue to evolve, it’s essential to stay informed about the latest trends that will shape the future of AI and ML.

Here are some key trends to watch:

1. Democratization of AI and ML

AI and ML are becoming more accessible, thanks to the development of user-friendly tools and platforms. This democratization empowers individuals and organizations with limited technical expertise to leverage these technologies. Cloud-based AI platforms and pre-trained models are making it easier than ever to build and deploy AI applications. This trend is likely to accelerate AI adoption across industries, driving innovation and efficiency.

2. The Rise of Explainable AI (XAI)

As AI systems become more complex, understanding their decision-making processes is crucial. Explainable AI (XAI) aims to make AI models more transparent and interpretable, allowing users to understand why a specific output was generated. XAI is critical for building trust in AI systems and ensuring responsible AI development. It’s particularly important in domains where explainability is essential, such as healthcare and finance.

3. Edge Computing and AI

Edge computing involves processing data closer to the source, reducing latency and improving performance. Combining edge computing with AI enables real-time decision-making and analysis, even in environments with limited connectivity. This trend is transforming industries like manufacturing, healthcare, and autonomous vehicles, where quick responses and local data processing are critical.

4. The Growing Importance of Data Privacy and Security

As AI systems rely heavily on data, ensuring data privacy and security is paramount. Regulations like the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) are raising awareness of data protection and ethical AI practices. Companies are investing in advanced security measures and data anonymization techniques to safeguard sensitive information. This trend will drive responsible AI development and foster trust between users and AI systems.

5. The Rise of AI-powered Automation

AI and ML are driving automation across industries, from customer service to manufacturing. This trend is transforming the workplace, automating repetitive tasks while also creating new kinds of jobs. The adoption of AI-powered automation is expected to keep accelerating, bringing gains in efficiency, productivity, and cost savings.

The future of AI and ML is bright, with these trends shaping the way we live, work, and interact with technology. As these technologies continue to evolve, it’s crucial to stay informed about the latest advancements and embrace the opportunities they present.